And the Hackathon’s Second Place Goes to: 3D Model for Robots

Team members Aviv, Lahav, Asher, Itay, and Eyal developed a system that lets operators watch robots in action in a 3D view and get accurate information on their position

“The Hackathon has been a really crazy experience for me,” says Aviv Gabay, 19. “It’s my first hackathon, so I wanted to get there with confidence in my knowledge, and I had so much fun collaborating with my team members in the process, getting to know new people and new work environments, and experiencing new subjects and stuff I hadn’t necessarily studied at university. I’m happy we’ve managed to get there, with this group. I’m really proud of my group.”

3D Model for Robots

Gabay is a 3rd-year Computer Engineering undergraduate. Ahead of the Hackathon, he teamed up with four friends, all Academic Reserve track students in the combined engineering and physics program: 4th-year students Asher Lajimi, Itay Elmaliah, and Eyal Itzhayek, and 3rd-year student Lahav Dabah. “We were a bit late enrolling in the Hackathon, just a week ahead, and as it wouldn’t be the first hackathon for some of the team, while for others it was the last opportunity, we really wanted to take it seriously and give it our best,” he says. “The week before the Hackathon, we started brainstorming and checking what we wanted to go for. Initially, we thought we’d focus on a solution that would take place on the ground and on specific aids, for defusing or eliminating landmines, for example. The day before the Hackathon, we changed course and decided to offer a general enhancement for the means presented to us at the conference, while focusing on the robot currently used by the Yahalom Unit. The robot has four cameras: front, rear, 360°, and upward facing, which makes it awkward to figure out its position in space. We were looking for a way to improve that. We decided to create a 3D model of the surrounding environment as seen by the robot, so we could paste this space onto a computer environment model. This meant creating a third-person camera for the robot, allowing us to see it mid-screen, complete with whatever was taking place around it, just as we might see computer game characters. This gives us much better insight into the robot’s position and makes orientation easier.”

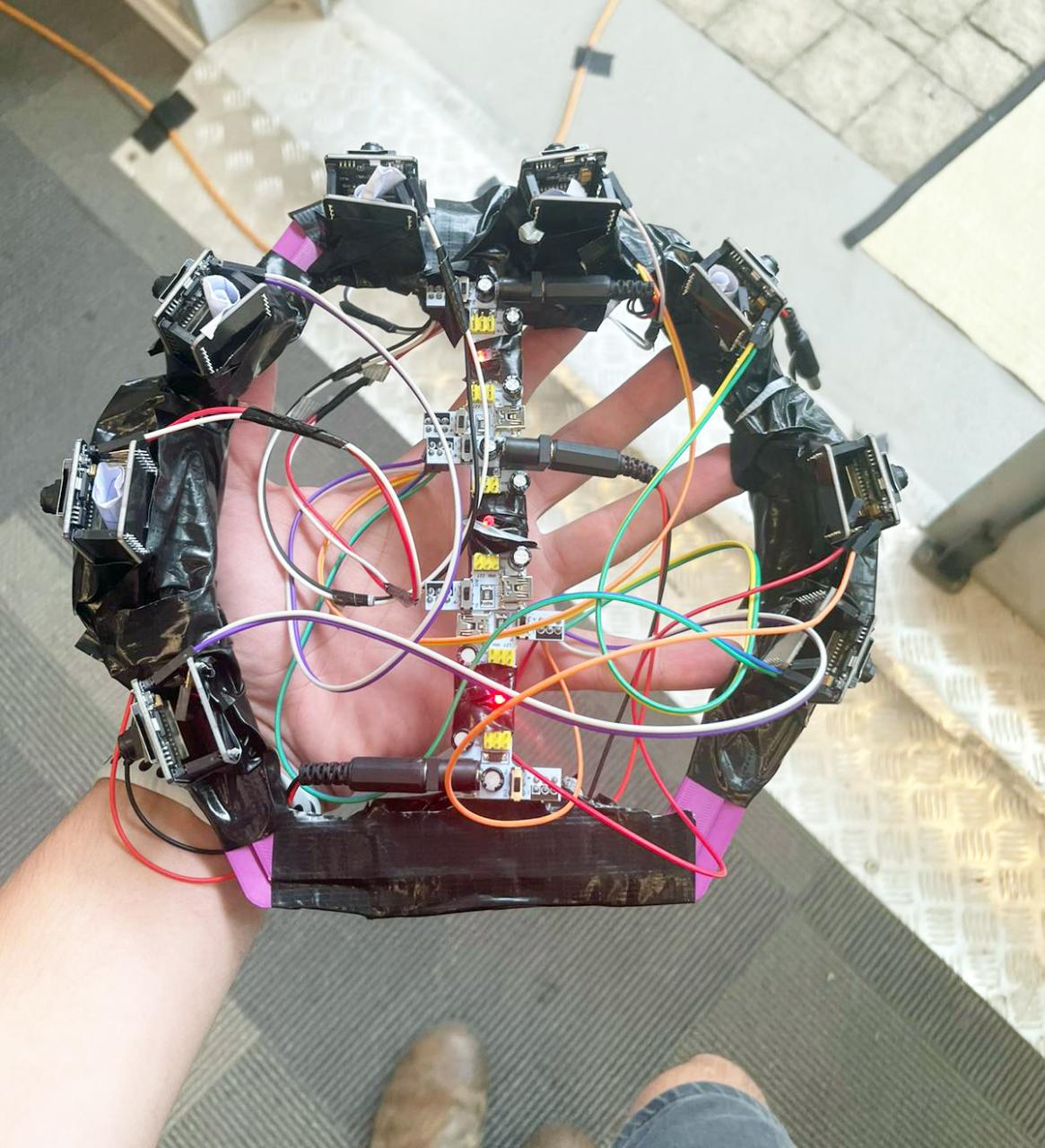

Their system comprised a hexagonal physical model with outward-facing cameras mounted around its perimeter, and code that processed the camera input and translated it into a panoramic image of the surrounding space. “We used the data received from the cameras to build a 3D spatial image, with a graphics engine. Whenever a new panoramic image was generated, we pasted it across our 3D space. This allowed us to place our object, the robot, mid-screen, surrounded by everything it was seeing in practice. The whole thing was transmitted to the operator’s monitor, allowing them to choose whether to see through the eyes of the robot, that is, whatever the robot was seeing, or the 3D image.”
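For readers curious about how a panorama can be "pasted" around a robot, here is a minimal sketch in Python. It is not the team's code, and it rests on several assumptions the article does not confirm: that the mount carries six cameras (one per face of the hexagon), that the stitched panorama is stored as an equirectangular image, and that the 3D view wraps that image around the robot like the inside of a sphere. The function names `camera_for_yaw` and `direction_to_pixel` are hypothetical.

```python
NUM_CAMERAS = 6  # assumption: one camera per face of the hexagonal mount


def camera_for_yaw(yaw_deg: float, num_cameras: int = NUM_CAMERAS) -> int:
    """Return the index of the camera whose sector contains a yaw angle.

    Assumes camera 0 is centred on yaw 0 and cameras are numbered in
    order of increasing yaw, each covering an equal slice of the circle.
    """
    sector = 360.0 / num_cameras
    # Shift by half a sector so that camera 0 covers [-sector/2, +sector/2).
    return int(((yaw_deg + sector / 2) % 360.0) // sector)


def direction_to_pixel(yaw_deg: float, pitch_deg: float,
                       width: int, height: int) -> tuple[int, int]:
    """Map a viewing direction to (column, row) in an equirectangular image.

    Yaw -180..180 degrees spans the image left to right; pitch +90 (straight
    up) is the top row and -90 (straight down) is the bottom row.
    """
    u = ((yaw_deg + 180.0) % 360.0) / 360.0   # horizontal fraction, 0..1
    v = (90.0 - pitch_deg) / 180.0            # vertical fraction, 0..1
    col = min(int(u * width), width - 1)
    row = min(max(int(v * height), 0), height - 1)
    return col, row
```

Under these assumptions, a third-person view would look up the panorama pixel for every direction visible around the robot, and each new panorama would simply replace the previous texture.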

The five team members had a division of labor in place. “Lahav was in charge of building the code that pulled together the camera images. Itay worked on the code that made sure every camera uploaded its images to a server, so that they could be accessed, and also wired the cameras. I was in charge of putting together the 3D space, with the option of viewing it, so that you could move around with the mouse and have the character look wherever the operator wished it to. Eyal was responsible for the physical model and for mounting the cameras, while Asher wrote the code that updates the space in the 3D environment with the new panoramic images in real time; he also gave us a hand wherever needed and was generally involved in every aspect of the project. Everyone did their share, but we collaborated and worked together, particularly towards the end. We also had considerable help from our mentors, particularly Ran Levy, who was really helpful when it came to the cameras’ code, and Liri Benzinou, who helped us in the small hours, particularly with the camera servers, as well as representatives from the unit and faculty. In general, everyone was more than willing to help; you could tell it was good intentions all around, for which we are really grateful.”

The project developed by the team earned them the second spot in the Hackathon, with a prize of 5,000 NIS. “But beyond the cash prize,” declares Aviv, “the important part for us was working together as a group, with the great gratification of achieving a running product that actually works, after all the effort we put in.”

Last Updated Date: 29/06/2023